WebSockets vs SSE vs HTTP: Trade-Offs You Only Notice in Production

Real-time systems rarely fail because of missing features. They fail because of architectural decisions made too early.

At small scale, real-time communication looks deceptively simple.

A request goes in.

A response comes out.

Updates feel instant.

At 100 users, HTTP polling works.

At 1,000 users, SSE feels elegant.

At 10,000 users, WebSockets feel inevitable.

And at 100,000 users, architecture decisions start billing you.

This article isn’t about what WebSockets, Server-Sent Events (SSE), or HTTP are.

It’s about where they break, why teams regret choices, and what trade-offs only appear in production.

The Illusion of “Real-Time”

Early-stage systems create a dangerous illusion:

“Everything is fast. Everything is stable. Why not just use WebSockets everywhere?”

Because real-time is not a feature; it’s a cost model.

Latency, memory, connection state, failure recovery, and horizontal scaling all compound over time.

Choosing the wrong transport doesn’t fail loudly.

It fails slowly, under load.

The Three Communication Models (Quick Reality Check)

Before diving into trade-offs, let’s align on the models — briefly.

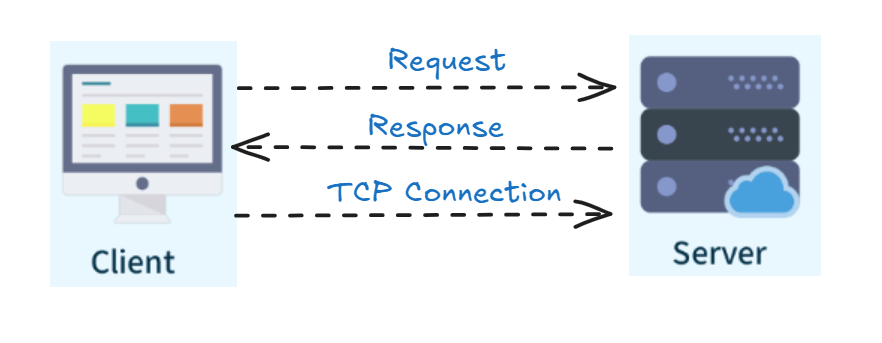

HTTP (Request–Response)

- Stateless

- Client-initiated

- Easy to cache, debug, and scale

Server-Sent Events (SSE)

- Long-lived HTTP connection

- Server → Client only

- Simple push semantics

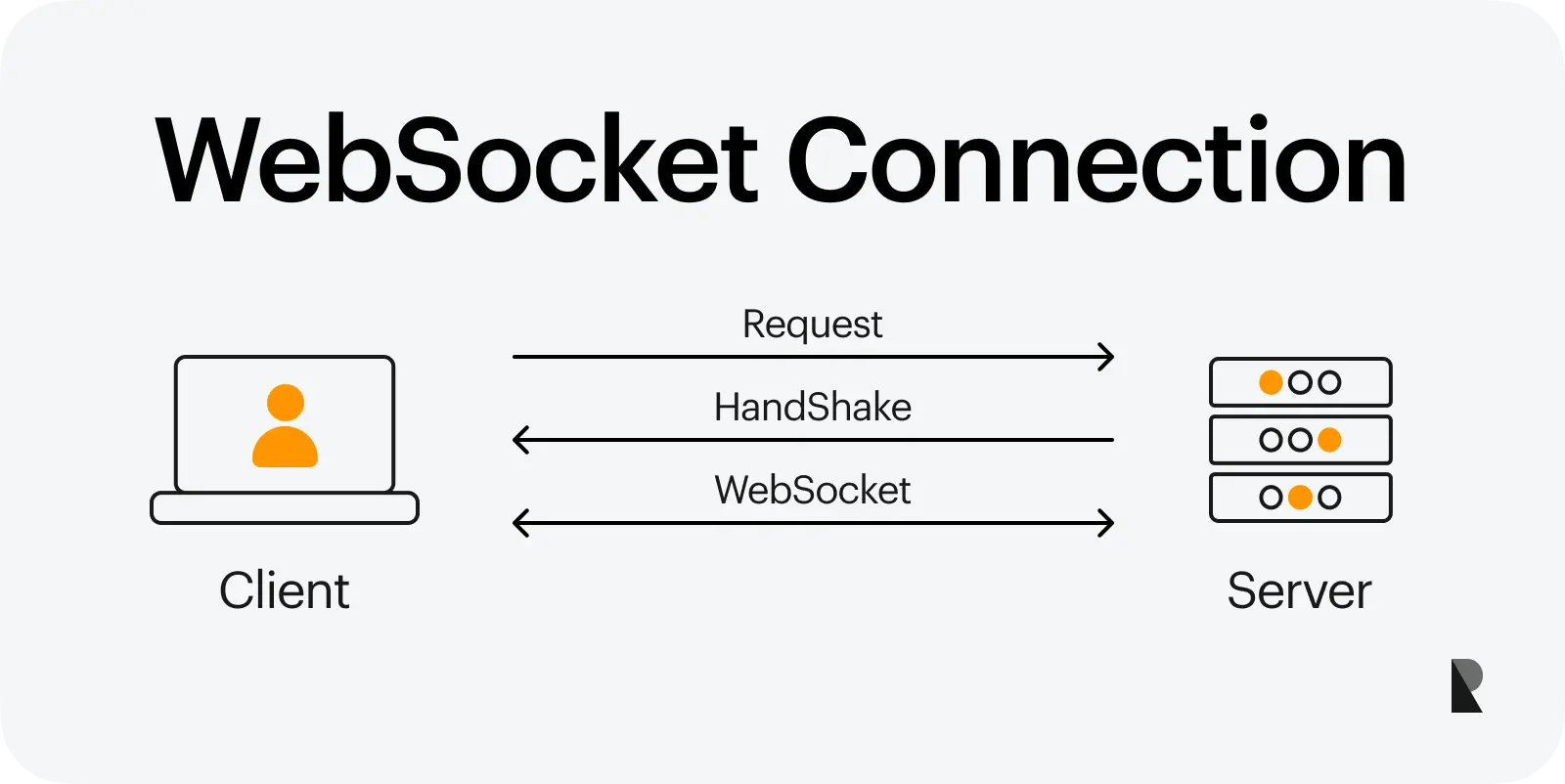

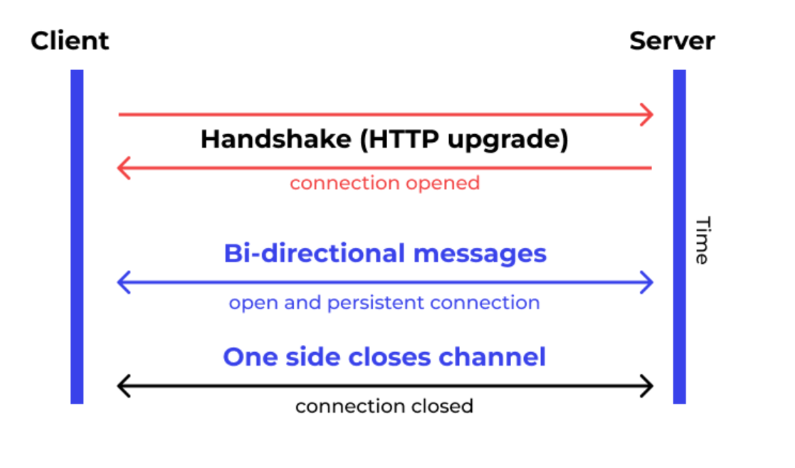

WebSockets

- Persistent, full-duplex connection

- Client ↔ Server

- State-heavy and powerful

At a glance, they look like a progression.

In production, they behave very differently.

The Comparison That Actually Matters

| Dimension | HTTP | SSE | WebSockets |
|---|---|---|---|
| Connection type | Per-request (stateless) | Long-lived | Persistent |
| Direction | Client-initiated request/response | Server → Client | Bi-directional |
| Server state | None | Minimal | High |
| Infra complexity | Low | Medium | High |
| Horizontal scaling | Trivial | Manageable | Hard |
| Cost per client | Low | Medium | High |

The more “real-time” you go, the more state leaks into your infrastructure.

Where Plain HTTP Quietly Wins

HTTP is often dismissed too quickly.

In production, HTTP is still the most reliable transport for:

- Authentication flows

- Idempotent operations

- Low-frequency updates

- Cacheable data

- CDN-backed APIs

If your update frequency is less than once per second, WebSockets are often unnecessary overhead.
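Polling cost is mostly simple arithmetic. The sketch below is a back-of-the-envelope model. It assumes every client polls on a fixed interval with requests spread evenly over time, and it ignores caching and conditional requests. The function name is hypothetical.

```python
def polling_requests_per_second(clients: int, interval_s: float) -> float:
    """Steady-state request rate if every client polls once per interval.

    Assumes polls are spread evenly over time (no synchronized bursts) and
    ignores caching, which in practice can absorb much of this load.
    """
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    return clients / interval_s


if __name__ == "__main__":
    # The user counts from the introduction, each client polling every 5 seconds.
    for users in (100, 1_000, 10_000, 100_000):
        rps = polling_requests_per_second(users, interval_s=5)
        print(f"{users:>7,} users -> {rps:>8,.0f} requests/s")
```

At 100,000 users, that comes to 20,000 requests per second. Each of those requests is independent, can often be cached, and can land on any node. That independence is what lets HTTP absorb the load.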

HTTP scales not because it’s fast, but because it’s stateless.

Stateless systems fail gracefully: a failed request can usually be retried against any healthy node.

SSE: Elegant, Useful, and Dangerous at Scale

SSE feels like the perfect middle ground.

And for many use cases, it is.

Where SSE shines

- Notifications

- Live dashboards

- Streaming logs

- One-way event feeds

Where SSE hurts

- Browser connection limits (over HTTP/1.1, roughly six open connections per domain, shared across tabs)

- Proxy and load balancer timeouts

- Limited backpressure control

- One-directional only
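Part of SSE's appeal is how little its wire format asks of you. A comment line is the usual remedy for proxies that close idle connections. Below is a minimal sketch of a server-side serializer for the `text/event-stream` format. The function names are hypothetical. It assumes your web framework lets you stream these strings into a response sent with `Content-Type: text/event-stream`. It does nothing about backpressure or the browser connection limit.

```python
import re
from typing import Optional

# SSE treats CRLF, LF, and CR all as line terminators.
_LINE_BREAK = re.compile(r"\r\n|\r|\n")


def _check_single_line(name: str, value: str) -> None:
    if _LINE_BREAK.search(value):
        raise ValueError(f"SSE field {name!r} must not contain line breaks")


def format_sse_event(data: str, event: Optional[str] = None,
                     event_id: Optional[str] = None,
                     retry_ms: Optional[int] = None) -> str:
    """Serialize one event in text/event-stream wire format."""
    lines = []
    if event is not None:
        _check_single_line("event", event)
        lines.append(f"event: {event}")
    if event_id is not None:
        _check_single_line("id", event_id)
        lines.append(f"id: {event_id}")
    if retry_ms is not None:
        # Tells the browser how long to wait before reconnecting.
        lines.append(f"retry: {int(retry_ms)}")
    # Multi-line payloads become one "data:" line per line of text.
    for line in _LINE_BREAK.split(data):
        lines.append(f"data: {line}")
    # A blank line ends the event and triggers dispatch on the client.
    return "\n".join(lines) + "\n\n"


def heartbeat_comment() -> str:
    """A comment line (starts with ':'). Clients ignore it, but the bytes on
    the wire keep idle-timeout proxies from closing the stream."""
    return ": keep-alive\n\n"
```

The HTML specification suggests sending such a comment roughly every 15 seconds. Treat that as a starting point and keep the interval below your proxies' idle timeout. Including `id` fields also helps on reconnect. A reconnecting `EventSource` sends the last id it saw in a `Last-Event-ID` header. The server can then resume the stream instead of replaying it, provided it keeps enough history.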

SSE works beautifully until fan-out increases.

At scale, every open connection becomes a silent cost:

- Memory

- File descriptors

- Load balancer pressure
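One application-level answer to limited backpressure and fan-out memory is to cap each client's backlog. Each connected client gets a bounded queue, and any client that falls behind is disconnected. This is a single-process sketch. The class and function names are hypothetical, and the eviction policy is an assumption; dropping the oldest messages or coalescing updates are common alternatives. In a real server, the `consume` task would write each message to an SSE or WebSocket response.

```python
import asyncio
from typing import List, Optional, Set, Tuple


class FanOut:
    """Broadcast hub with one bounded queue per connected client (hypothetical)."""

    def __init__(self, max_backlog: int = 100) -> None:
        self.max_backlog = max_backlog
        self.subscribers: Set[asyncio.Queue] = set()
        self.evicted = 0

    def subscribe(self) -> asyncio.Queue:
        queue: asyncio.Queue = asyncio.Queue(maxsize=self.max_backlog)
        self.subscribers.add(queue)
        return queue

    def publish(self, message: str) -> None:
        # Copy the set: slow subscribers may be evicted during the loop.
        for queue in list(self.subscribers):
            try:
                queue.put_nowait(message)
            except asyncio.QueueFull:
                self._evict(queue)

    def _evict(self, queue: asyncio.Queue) -> None:
        self.subscribers.discard(queue)
        self.evicted += 1
        # Discard the stale backlog and leave a None sentinel so the
        # consumer wakes up and closes its connection.
        while not queue.empty():
            queue.get_nowait()
        queue.put_nowait(None)


async def consume(queue: asyncio.Queue, received: List[str]) -> None:
    """Stand-in for the per-connection writer task."""
    while True:
        message = await queue.get()
        if message is None:  # evicted or told to stop: close the connection
            return
        received.append(message)


async def demo() -> Tuple[List[str], int, Optional[str]]:
    hub = FanOut(max_backlog=2)
    fast, slow = hub.subscribe(), hub.subscribe()
    fast_seen: List[str] = []
    fast_task = asyncio.create_task(consume(fast, fast_seen))
    for i in range(5):
        hub.publish(f"event-{i}")
        await asyncio.sleep(0)  # the fast consumer keeps up; nobody reads `slow`
    await fast.put(None)  # end the demo: tell the fast consumer to stop
    await fast_task
    return fast_seen, hub.evicted, slow.get_nowait()


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

The trade-off is explicit here: memory per client is bounded by `max_backlog`. The price is that a slow client loses its connection rather than slowly starving the process.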

SSE doesn’t fail instantly.

It degrades quietly.

WebSockets: Power with a Price Tag

WebSockets unlock true real-time systems.

They also introduce problems HTTP never had.

What WebSockets demand from you

- Connection lifecycle management

- Heartbeats and liveness checks

- Reconnection logic

- Backpressure handling

- Message ordering

- State synchronization
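Two items on that list, liveness checks and reconnection logic, fit in a few lines each. The sketch below assumes each connection has a string ID. It also assumes any inbound frame, such as a WebSocket pong or an application heartbeat, counts as proof of life. The class and function names are hypothetical. The timeout, base delay, and cap are illustrative values, not recommendations.

```python
import random
import time
from typing import Callable, Dict, List, Optional


class LivenessTracker:
    """Server side: find connections whose heartbeats have stopped (hypothetical)."""

    def __init__(self, timeout_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.timeout_s = timeout_s
        self.clock = clock  # injectable so the logic can be tested
        self.last_seen: Dict[str, float] = {}

    def touch(self, conn_id: str) -> None:
        """Record any inbound frame (pong or app heartbeat) as proof of life."""
        self.last_seen[conn_id] = self.clock()

    def reap(self) -> List[str]:
        """Forget and return connections silent for longer than the timeout."""
        now = self.clock()
        dead = [c for c, t in self.last_seen.items() if now - t > self.timeout_s]
        for conn_id in dead:
            del self.last_seen[conn_id]
        return dead


def reconnect_delays(attempts: int, base_s: float = 0.5, cap_s: float = 30.0,
                     rng: Optional[random.Random] = None) -> List[float]:
    """Client side: exponential backoff with "full jitter".

    Attempt n waits a random time in [0, min(cap, base * 2**n)], so clients
    dropped at the same moment (say, by a deploy) do not all reconnect at once.
    """
    rng = rng or random.Random()
    return [rng.uniform(0, min(cap_s, base_s * 2 ** n)) for n in range(attempts)]
```

A server would call `reap()` on a timer and close the connections it returns. A client would sleep for each delay in turn before retrying, and reset the schedule after a successful connection.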

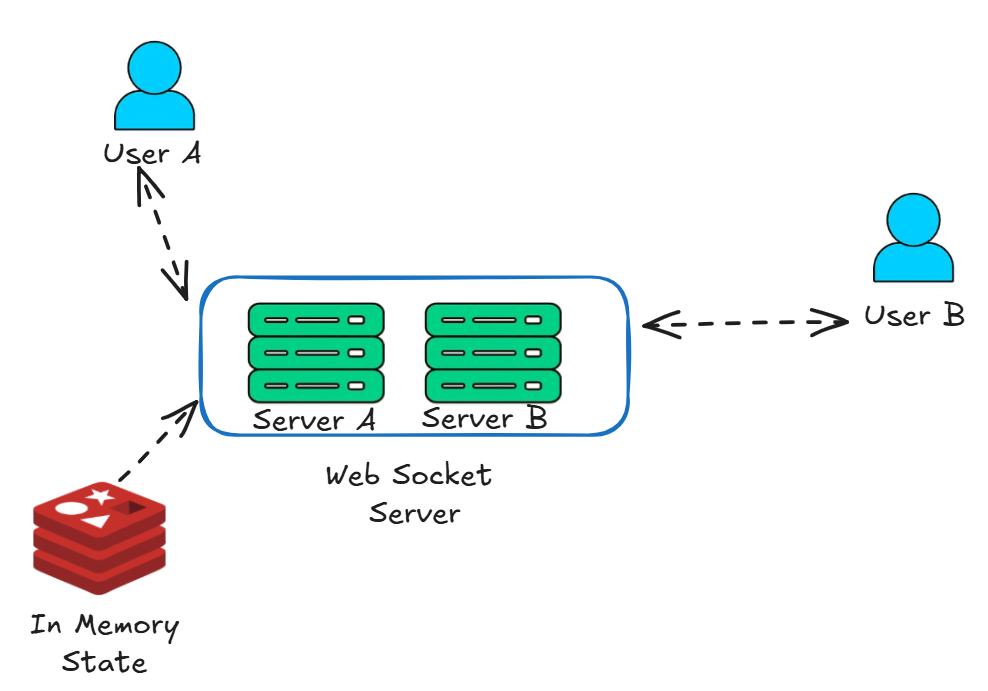

Scaling pain nobody mentions early

- One socket = one stateful client

- Horizontal scaling requires Pub/Sub

- Sticky sessions or connection routing

- Memory grows linearly with users

WebSockets don’t scale horizontally by default; you have to design them to.

Without Redis, Kafka, or another message broker, multi-node WebSocket systems break as soon as a message originates on one node while its recipients are connected to another.
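The failure mode is easy to see in miniature: a node can only write to sockets it holds. The sketch below uses an in-memory class as a stand-in for a real broker such as Redis Pub/Sub. The classes, method names, and room model are hypothetical, and plain lists stand in for client sockets.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List


class InMemoryBroker:
    """Toy stand-in for Redis Pub/Sub or similar: fans each message out to
    every node subscribed to the channel. No network, persistence, or failures."""

    def __init__(self) -> None:
        self.handlers: DefaultDict[str, List[Callable[[str], None]]] = defaultdict(list)

    def subscribe(self, channel: str, handler: Callable[[str], None]) -> None:
        self.handlers[channel].append(handler)

    def publish(self, channel: str, message: str) -> None:
        for handler in self.handlers[channel]:
            handler(message)


class Node:
    """One WebSocket server process. It can only write to sockets it holds;
    here each 'socket' is a list acting as the client's inbox."""

    def __init__(self, name: str, broker: InMemoryBroker) -> None:
        self.name = name
        self.broker = broker
        self.rooms: DefaultDict[str, List[List[str]]] = defaultdict(list)

    def join(self, room: str, inbox: List[str]) -> None:
        if room not in self.rooms:
            # First local member: listen for this room's traffic cluster-wide.
            self.broker.subscribe(room, lambda msg, r=room: self.deliver_local(r, msg))
        self.rooms[room].append(inbox)

    def deliver_local(self, room: str, message: str) -> None:
        """All a node can do on its own: reach its locally connected clients."""
        for inbox in self.rooms[room]:
            inbox.append(message)

    def broadcast(self, room: str, message: str) -> None:
        # Route through the broker so members connected to other nodes see it.
        self.broker.publish(room, message)
```

If a node calls `deliver_local` directly, as a broker-less design would, clients on other nodes never see the message. Only `broadcast` reaches everyone in the room. This is also why sticky sessions alone are not enough: they keep a client on one node, but they do not move messages between nodes.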

The Hidden Cost: Failure Becomes Real-Time Too

Real-time systems don’t just deliver messages faster.

They deliver:

- Bugs faster

- Memory leaks faster

- Cascading failures faster

A bad deploy on HTTP affects requests.

A bad deploy on WebSockets affects every connected user instantly.

Your blast radius increases.

A Practical Decision Framework

Instead of asking “Which is better?”, ask what your workload actually needs:

Choose HTTP if:

- Updates are infrequent

- Data is cacheable

- Simplicity matters more than latency

- You want easy observability

Choose SSE if:

- You only need server → client updates

- Fan-out is moderate

- You want simpler infrastructure than WebSockets

Choose WebSockets if:

- You need true bi-directional communication

- Latency must be consistently low

- You can invest in infra and ops maturity

- You’re ready to manage state at scale
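The checklist can be encoded as a small function, mostly so that each question has to be answered explicitly. This is a deliberately simplified sketch. The `Workload` fields are hypothetical. The one-update-per-second cutoff comes from the rule of thumb in the HTTP section. The function ignores fan-out, latency targets, and cacheability, all of which a real review would weigh.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Workload:
    """Hypothetical summary of one feature's traffic; fields mirror the checklist."""
    bidirectional: bool          # clients also stream messages to the server
    updates_per_second: float    # typical server -> client update rate per client
    ops_ready_for_state: bool    # pub/sub, connection routing, on-call maturity


def choose_transport(w: Workload) -> Optional[str]:
    """Return the simplest transport the checklist allows, or None if none fits yet."""
    if w.bidirectional:
        # Full duplex is WebSocket territory, but only with the infra to run it.
        return "WebSockets" if w.ops_ready_for_state else None
    if w.updates_per_second < 1:
        # Infrequent updates: plain HTTP stays cheap, cacheable, and observable.
        return "HTTP"
    # Frequent updates that only flow server -> client.
    return "SSE"
```

A `None` result means neither the requirement nor the infrastructure is ready yet, and one of them has to change first.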

Real-time is not binary.

It’s a spectrum.

The Hard Truth

Choosing WebSockets doesn’t make your system real-time.

It makes your failures real-time too.

Production systems aren’t defined by how fast they work when everything is healthy, but by how predictably they fail when things go wrong.

Pick the simplest transport that survives your scale.

Everything else is accidental complexity.

Production Lessons

Most systems don’t fail because of missing features.

They fail because of premature architectural ambition.

Understand the trade-offs.

Respect state.

Design for failure.

That’s where real engineering begins.